AI use in award entries – research by The Independent Awards Standards Council

How widespread is the practice of using generative AI as the primary writer of award entries?

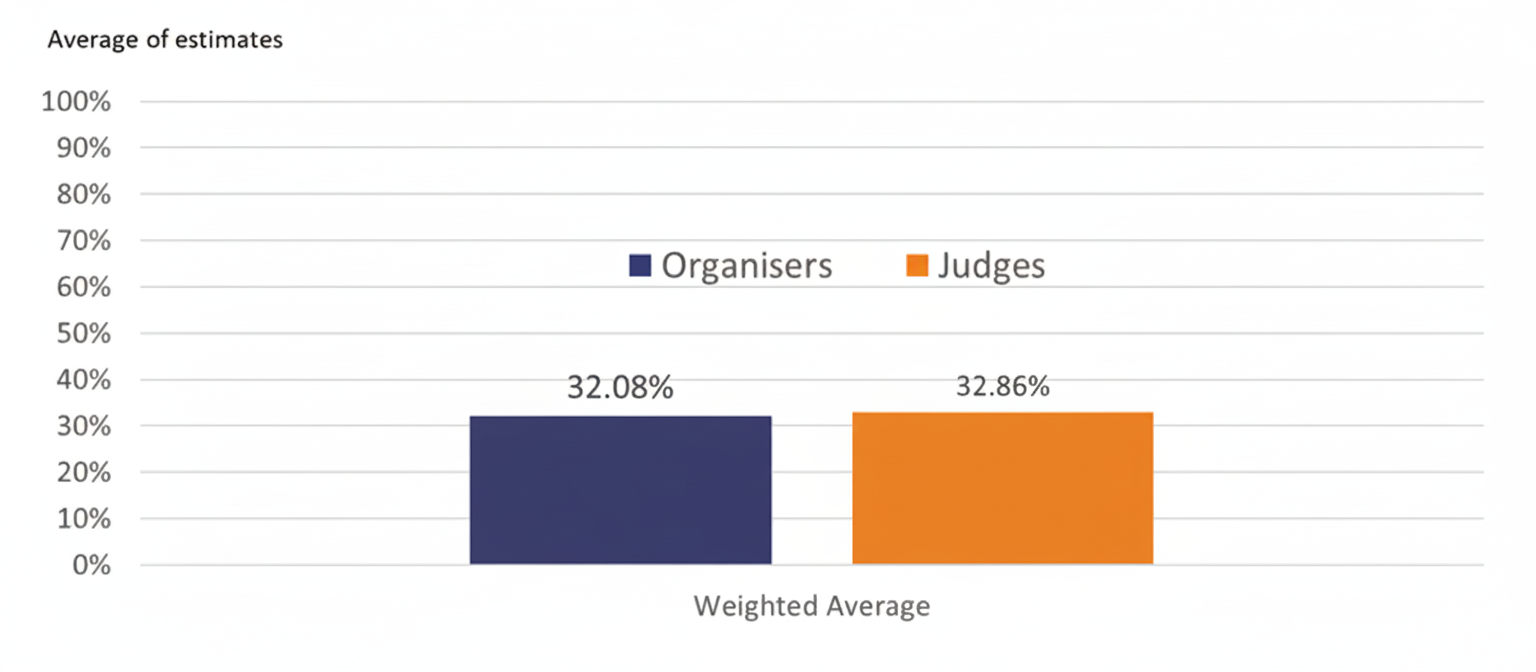

We asked awards organisers and awards judges: ‘What proportion of 2025 award entries appeared to be significantly AI-generated?’ Both estimated this figure to be about one-third. (This was arrived at by averaging the estimates of those confident enough to express an opinion.)

Judges and organisers both estimated that about one-third of the awards entries they saw in 2025 were AI-generated

This figure is likely to increase: 92% of judges and 100% of organisers agreed with the statement ‘I envisage more entries being AI-generated in the future’. (When we asked judges and organisers the same question last year, the average estimate was already one-third, and both groups expected the figure to rise. However, that anticipated increase does not appear to have materialised.)

Can judges spot a generative AI entry?

71% of judges agree with the statement: ‘I believe I could spot an entry which has been largely AI-generated’. Awards organisers appear slightly more cautious, with 57% agreeing: ‘I believe our awards judges could spot an entry which has been largely AI-generated’.

When we asked, ‘What do you think are the tell-tale signs of AI?’, judges pointed out several indicators, including:

- ‘Bland, generic-sounding copy. I tend to mark those down…’

- ‘Repetitive, common AI words.’

- ‘The language [used] and certain phrases: also the structure, broken into paragraphs and bullet points.’

- ‘The summary paragraph at the end is often a giveaway.’

- ‘Technical language written in perfect grammar, saying all the right things but with little impact.’

Do judges and organisers approve of using AI?

As we will explore later, most awards programmes have not yet formalised the extent to which they support or oppose generative AI usage in drafting entries. However, when we asked judges and organisers, they seemed torn:

- 58% of judges, and all awards organisers, agree: ‘I believe that using AI in the award entry process for procedures like interview transcription and content/data processing is acceptable’.

- However, fewer (50% of judges and 71% of awards organisers) agree with the statement: ‘I believe that using generative AI to draft the final award entry is acceptable’.

In the words of one awards judge, explaining why they would rather entrants used AI than PR agencies: ‘I think that a small business using AI to help them format and express themselves better is actually a good thing, as entries are often harder to read and judge without this! …I’d rather they used AI to help with their grammar and layout, and to answer the question fully, than used a PR company. As long as the actual content is good, I don’t mind this.’

Another judge articulated how to balance the two approaches above: ‘AI can be a useful tool in awards entries. It often helps candidates to ensure they’ve covered all the key elements of the questions they’re asked to answer, and that the answers are structured clearly. It’s important, however, that a person puts in the effort to place the original information into their draft, and also edits the entry so it maintains their tone of voice and passion for their business or project. Those using AI and putting very little effort in themselves will do poorly, as AI often makes mistakes and gets things wrong: but it is a valuable and helpful tool when used well.’

Should any action be taken?

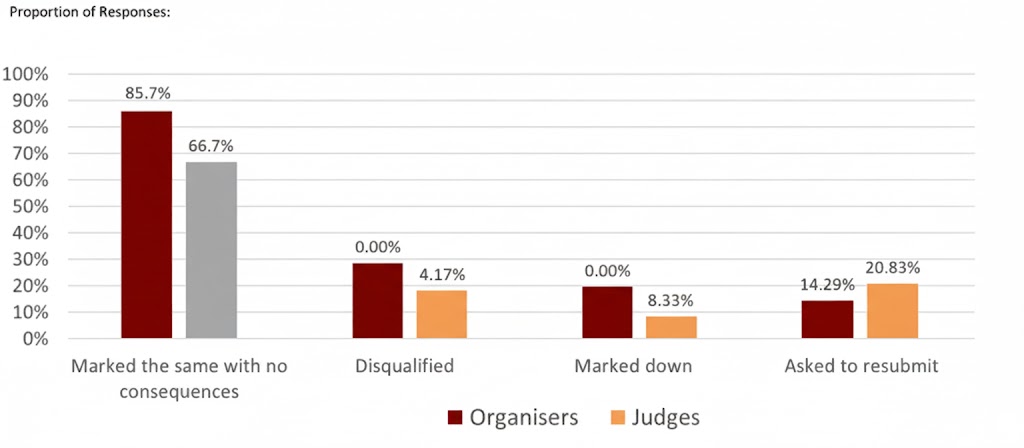

When we asked organisers and judges to complete the statement ‘In your view, award entries which are substantially AI-generated should be…’, most supported taking no action. However, some strong opposing views were also expressed, as the graph shows. Clearly, if you are submitting an entry that includes generative AI content, you would hope not to be assessed by one of the judges who thinks it should be disqualified!

Most judges and organisers do not think any action should be taken against generative AI entries, but a minority think such entries should be resubmitted or disqualified

Do judges think or act differently?

When judges are faced with an award entry that was clearly written by generative AI, do they assess it impartially, or does the very fact that it is AI-generated affect their goodwill or their scoring? Here, a narrow majority of judges will not let it affect either; a significant minority, however, will. This matters because most panels comprise multiple judges, so it is highly likely that at least one member of any given panel will respond negatively to an overtly AI-written entry.

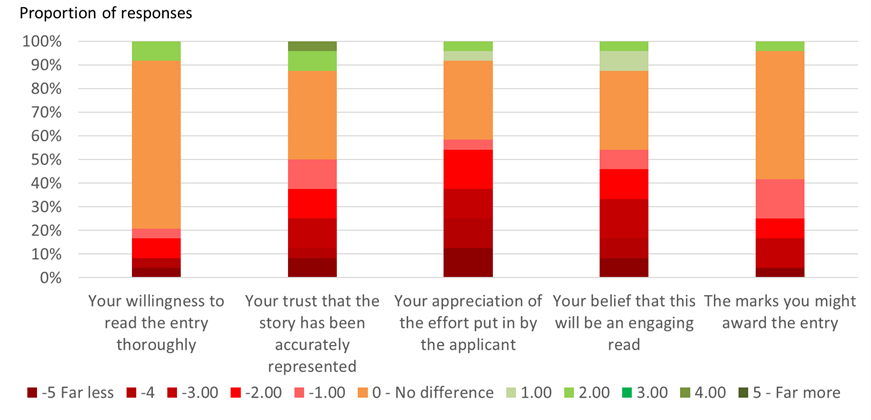

We asked: ‘When you suspect that an award entry has been mostly written using AI, does this have an effect upon any of the following?’ The results were revealing.

- Firstly, the impact on goodwill. When asked about their ‘appreciation of the effort put in by the applicant’, 58% said ‘less’, and 20% said their ‘willingness to read the entry thoroughly’ was also ‘less’.

- In terms of their ‘trust that the story has been accurately represented’, 50% said ‘less’.

- Most consequentially, in terms of the marks they ‘might award the entry’, 42% said ‘less’.

We asked: ‘When you suspect that an award entry has been mostly written using AI, what effect does this have on the following?’ Although many responses are ambivalent (amber), a significant proportion of judges take a dim view and would react negatively (red).

Here follow judges’ comments from both sides of the fence. Firstly, a balanced but generally supportive view: ‘I wouldn’t consciously mark an entry down… However, the lack of impact in the writing would affect the overall impression. It would pack a lot less of a punch and miss an underlying authenticity of the applicant’s voice, which would probably lose the entry marks and make it less memorable.’

Now, a judge with a very strong anti-AI view: ‘Anyone using AI to generate an entrant’s report should be disqualified. The whole idea is to get authentic, passionate commentary in their entry. Even if an entrant is not as capable of [supplying] a perfectly-written entry, you could still pick out the real passion and authenticity of a personal entry.’

Time-poor applicants therefore face a decision: will using AI earn them more marks than it might cost them?

Should awards have a clear policy?

The most important thing awards organisers can do with the insight presented here is to write a clear policy and share it proactively and visibly with judges and entrants.

The good news is that the proportion of organisers saying ‘we had a rule/policy for applicants and judges in our most recent programme’ has increased from 13% in 2024 to 43% in 2025. Moreover, an additional 14% plan to publish one in the future.

The only issue is a disconnect: the vast majority of judges either did not see any rule or policy during their 2025 judging, or did not remember one.

Let’s hope that the next time we run this research there will be even more clear policies, and more judges remembering them.

Filtering

Currently, only a small minority of awards organisers – 14% – filter AI-generated entries. However, given the conflicting views above, and the growing number of awards platforms building AI filtering into their systems, organisers face a dilemma: should they turn filtering on? Interestingly, 57% of organisers said they would (down slightly from 65% last year). The responses suggest that entries flagged by filtering would not generally be disqualified; in extreme cases, entrants would more likely be asked to resubmit.

AI for judging

Another frontier for AI in awards lies on the other side of the table: its use in judging. We explored this by asking organisers: ‘How likely is it that you will introduce some form of AI into your judging process?’ We were surprised by the appetite for this: about one-third said ‘likely’ or ‘very likely’, and 29% are already using it or plan to.

Other considerations

In addition to the pros and cons we’ve already identified, a few other considerations are worth noting here:

- Two-stage processes: Let’s not forget that a number of awards have a second stage – a face-to-face element, requiring a person or team to be physically present. One respondent suggested that this stage might become more necessary with time: ‘We have two rounds of judging – desktop Round 1, and Round 2 – and in most categories, this will involve an onsite visit. I think this is a fair system, as there’s sometimes a difference between [the] written [entry] and on-the-ground reality.’

- International entries: As one respondent rightly pointed out, AI does help non-English speakers to apply for English-focused awards. It also helps those with dyslexia and writing challenges to compete on a more level playing field.

- The sustainability factor: One awards judge pointed out: ‘AI is energy-heavy and environmentally damaging. If for no other reason, then it must not be encouraged. However, I also accept that some people struggle with articulation, and [can see] why it would be attractive to such people, who are at a disadvantage when applying for awards.’

Conclusion

It would appear that the awards community is still very much torn on the topic of AI. Around one-third of entries appear to rely heavily on it, judges are split down the middle, and awards organisers are undecided. The key conclusion is that, in the absence of consensus, it is up to awards organisers to decide whether visibly AI-generated entries are welcome, and then to communicate this clearly.

Even if such entries are deemed acceptable, the winners will ultimately be decided by panels of humans, and those accepting the awards will, of course, also be human. The extent to which the bit in the middle is undertaken by robots is expected to increase, although – as a comparison of this year’s findings with last year’s shows – less rapidly than we all expected.