AI use in award entries – research by The Independent Awards Standards Council

Insights from the awards industry about the use of AI

During October and November 2024, members of the Independent Awards Standards Council used LinkedIn, personal connections and an email campaign to seek the views of awards judges and awards organisers on the increasingly important subject of award submissions being drafted using generative AI. Is it an acceptable evolution, or is it bad practice that should be deterred?

The purpose of this research is to:

- Establish the current and projected prevalence of AI-generated award entries.

- Determine whether judges and organisers are generally in favour of it or against it.

- Provide quantitative and qualitative insight to help organisers clarify a position to those entering and judging their awards so there is alignment. This is absolutely vital to ensure every awards programme operates on a level playing field.

A comment from the survey picked up on this purpose: “The integration of AI in award submissions is indeed a complex and evolving topic, and it’s crucial to establish clear guidelines as AI technology becomes more prevalent.”

Who took part?

The research garnered 60 responses: 83.3% from Europe, 13.3% from APAC and 3.3% from the Americas.

Top level views

The main finding here was that there are very different and frequently opposing views on this subject from awards judges and awards organisers.

This report aims to communicate both perspectives and allow awards entrants, organisers and judges to make an informed choice as to what their approach should be.

To kick off, here are some of the comments for and against using generative AI when composing award entries:

In favour:

“A lot of awards entries are written in quite a generic, boring style which would be hard to tell apart from AI. But AI is evolving so rapidly, it would soon be almost impossible to tell anyway. And that’s fine.”

“I am a long-serving judge of numerous awards in the UK IT and Telecoms channel sector. In my experience and judgement, it is not the core submission that elevates entries into winners, but a combination of verifiable business impact and customer testimonials which are harder to generate with AI, so I don’t see a major threat here.”

“I think using AI generation for awards is fine as long as a human provides the original input and alters it to ensure it is accurate etc. It is no different from using a PR company really – just cheaper for the applicant.”

“We do not feel that [using] AI in regards to award nominations is a negative for the entry experience. As long as the data submitted in the entry is accurate, why is finding a less time consuming way to present the entry a bad thing?”

“Whether the actual words on an application form are produced by AI or not is irrelevant: what matters is the quality of the application, which is for (human!) judges to decide.”

“The plus side is that it may encourage more entries as it reduces the burden.”

Against:

“AI may have a place in the world but I find it insulting personally as I like to be communicated with by a real human, and to know effort has been used to create the award entry.”

“I think it’s lazy and takes away from the people who’ve spent the time writing their entries… I think people who’ve committed the time to putting their entries together should be prioritised over those who haven’t.”

“Currently, it seems most AI-generated copy isn’t as good as those well-written by humans, though that may change.”

“It’s a big issue – Our awards are very trades-focused, and it’s all about that unique job – AI cannot yet tell their story, so sometimes it almost dumbs down the entry, which is a shame… a lot of the time the entries that use [AI] just don’t make sense!”

Can judges spot AI-generated content, and do they care?

Of those who took part, 93% of judges had judged award entries in 2024 and the remainder had most recently judged awards in 2023; so their views were all current enough for the purposes of this research.

They were asked to score from 0 (none) to 10 (all) the “proportion of award entries [that] appeared to be significantly AI-generated”. There was a wide distribution of scores, but the average was 3.5/10, suggesting that roughly 35% of the award entries they judged were visibly and significantly AI-generated. This is a high figure, but it makes sense given that the majority of award entries are written in-house by people who are busy with other jobs.

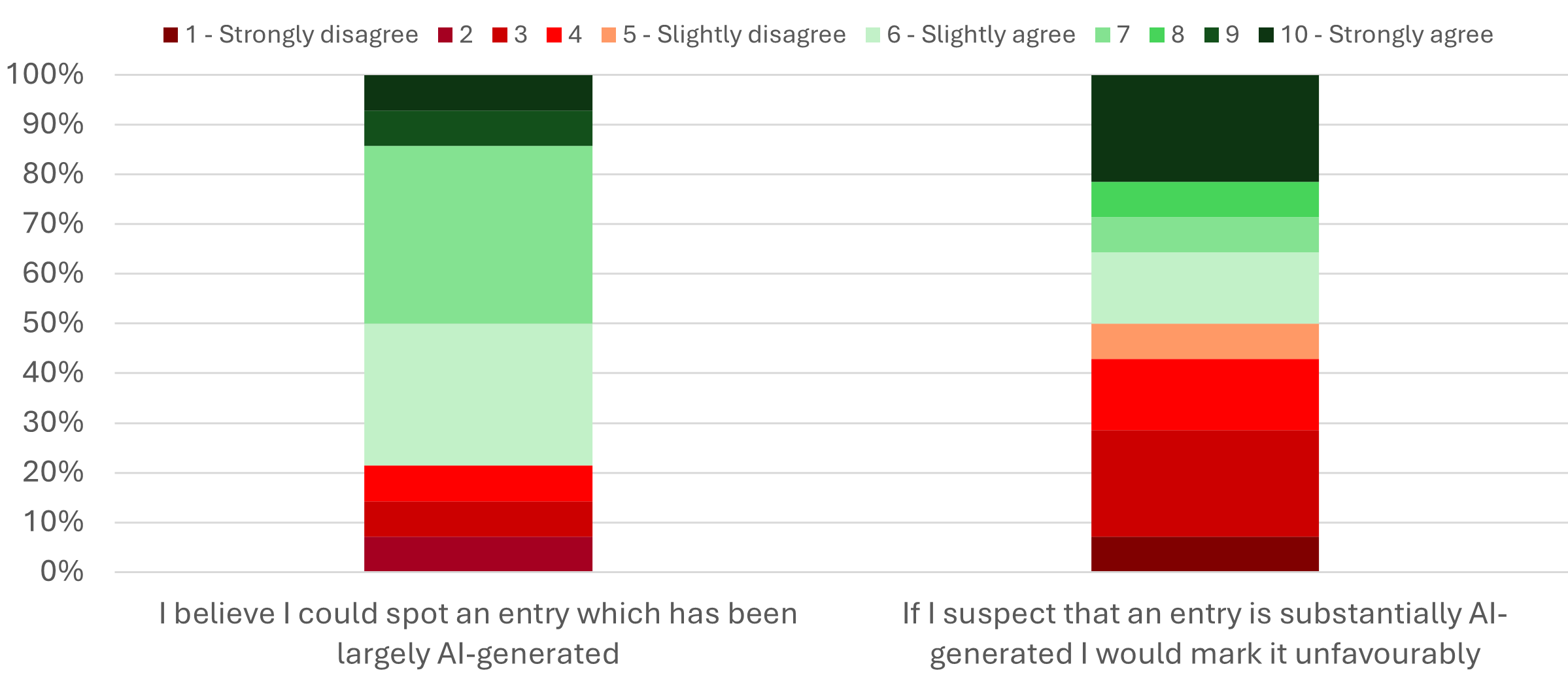

This research next sought to clarify whether, when it comes to AI-generated content, judges (a) noticed or (b) cared.

The answer to the first question is a clear “yes”. They can spot it. Here were some of their comments about the tell-tale signs:

“Corporate language used”

“…Can lack soul and a connection with the entrant”

“Generic and rather formulaic.”

“Lacked humour. And no errors.”

“Tone of Voice (insistent, over-eager, hyperbolic.)”

“The way things were constructed. It is always easy to spot PRs doing entries (all smoke and mirrors) and founders often miss important things out which are there really. There is something slick about the AI generated ones that you can kind of spot.”

But do they care? The answer here is less consistent. Even where the rules do not require them to penalise an AI-generated entry, judges are torn on whether to mark it down anyway: 50% would deliberately mark down clearly AI-generated entries.

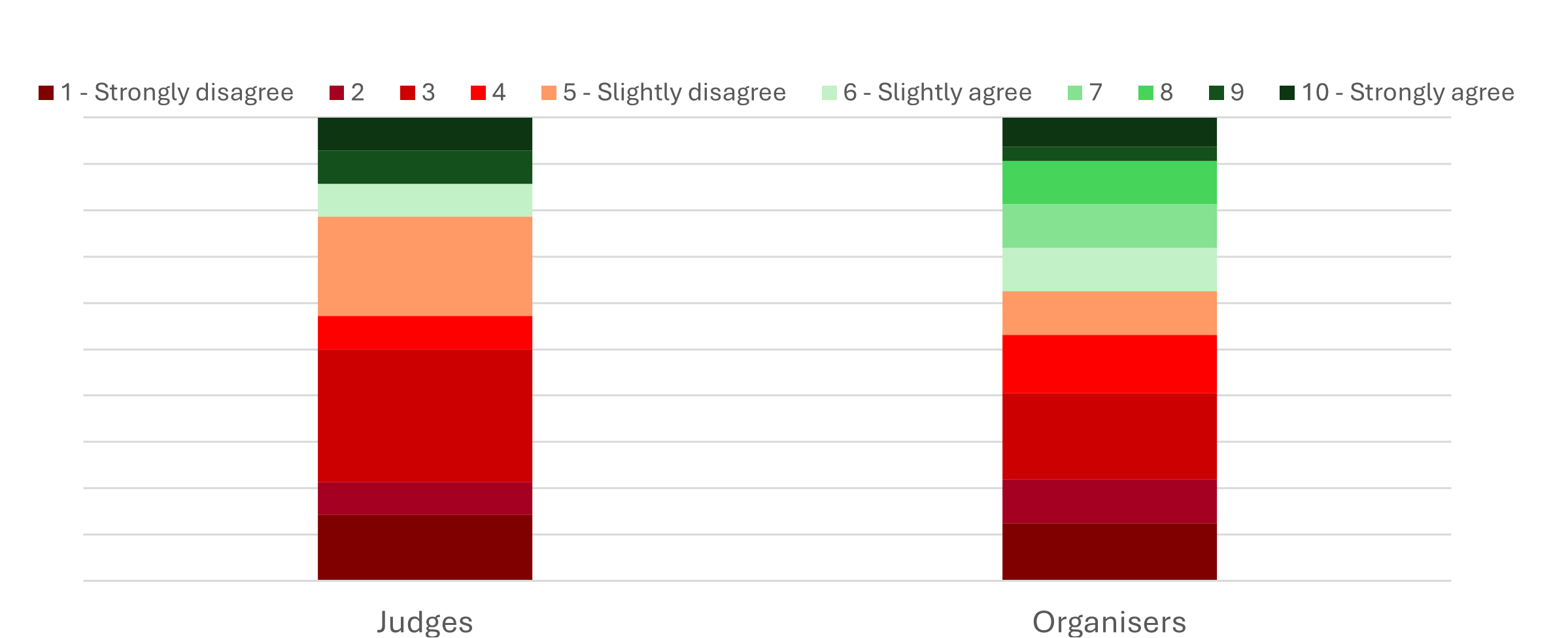

It is worth noting that 53.14% of organisers think their judges will mark down AI-generated entries: a similar figure, reflecting the same lack of consensus.

The comments suggest that judges disapprove less of the act of using AI than of the fact that AI often generates worse entries, which they do not enjoy reading and which they mark down for making them endure the following:

“Non contextual information that has not been through a once-over by the entering team and replaced.”

“Inconsistency in tone, indicating that different questions had been fed in at different times.”

“Some answers were very fluent and grammatically perfect while others had errors strewn through them, indicating different authors.”

“Extensive use of cliches, waffle, lack of direct examples and evidence.”

“Generic responses, long sentences and a focus on definitions as opposed to what they actually did to meet the criteria.”

“…Also, AI can (currently) make simple mistakes that the human should review.”

Although there is disagreement on whether AI is a good or bad thing generally, and on whether AI-written entries should be marked down as a matter of principle, there is clear consensus that awards should be given to those who deserve them, and that the quality of the entry and its evidence are key. AI, used well, can help speed up the process, but at the end of the day the submission has to do the entrant justice, whether the information is assembled, structured and presented by AI or by a human. In the words of one judge: “Many entries were AI-generated, but I did not mark them down if they answered the question and had supporting evidence.”

“If everyone used AI, I’m pretty certain that high quality entries would still stand out above the rest.”

The issue of trust

Regardless of policy, trust is a major concern: only 21.4% of judges and 37.5% of organisers trust AI to provide an honest reflection of reality.

The statement put forward was “I trust generative AI to be able to accurately represent the reality of the story being entered”. See the graph below for results.

This pair of statistics is extremely important. Organisers need to trust the decisions of judges and be proud of the fact that the right entrants won. Equally, judges lean towards the entries they trust. Trust can be earned through strong evidence and compelling storytelling, but equally, it can be lost if the narrator isn’t believed. So if a judge believes ChatGPT or similar is the author of the entry, and has experienced the classic “hallucinating” of AI and other instances where it was completely wrong, then trust is eroded. A judge is unlikely to give an award to an entry she/he doesn’t trust, no matter how grammatically correct it is.

Who should declare it?

Organisers had a similar view to judges in terms of who should declare the use of generative AI in writing award entries. In responding to the survey they could tick multiple options here. 46.7% said the applicant, 25.0% said the organisers, 43.8% said the judges, and 18.8% said “It doesn’t matter, it shouldn’t be a factor in selecting the winner.”

As one organiser put it:

“Personally, I don’t really think having AI help with awards entries is an issue as judges usually judge them on the substance rather than the writing style, e.g. facts, figures, qualifiable achievements.”

Another organiser commented: “Entrants using AI should be transparent, detailing the extent of AI assistance.”

We also asked judges: “Whose job is it to spot award entries that are created by generative AI?” and they could tick as many options as they liked. Here, they were split. Half said: “The applicant should declare it”, and the others were divided between: “The organisers should use filters” (28.6%), “It is up to the judges” (35.7%), and “It doesn’t matter, it shouldn’t be a factor in selecting the winner” (28.6%).

Here were two comments by judges supporting disclosure:

“We could encourage applicants to disclose their use of AI and explain how it contributed to their work, adding transparency to the process.”

“I think we should ask for a declaration (was it used?) but include the entries.”

Should awards filter AI-generated content?

Do organisers already filter AI-generated content? Largely no. Fewer than a third do: 18.8% of organisers already use humans to filter AI-generated content, and 12.5% use technology.

But would they? The most dramatic statistic, and a rare consensus, was in the use of filtering. We asked “If your awards platform supported the filtering of AI-generated content, would you turn this feature on?” and 65.6% said “yes” with 15.6% undecided. Only 18.8% said “no”.

We asked what filtering techniques they used and the responses were:

- “Human being.”

- “We use tech and human eye – at the moment it’s fairly easy to spot, but will get harder as AI develops. We put the entry back into open- and closed-sourced to see what it kicks out back.”

- “Judges have been able to spot sections written by AI.”

- “GPTZero, Grammarly and Originality.AI.”

- “Put together by myself on my website.”

- “Moving to Evessio [an awards management platform] soon who I believe are adding AI into their tech.”

Should there be sanctions?

When it comes to the sanctions imposed when it is clear that an entry is largely AI-generated, awards organisers appear stricter than the judges. Although very few (just one response) suggest disqualification, 25.0% say it should be marked down, and 21.9% say applicants should be asked to resubmit.

One organiser explained their rationale for not imposing sanctions:

“We had this discussion at our judge’s sessions this year. Many business owners are great at what they do, but writing an award entry is not their strong point. I have no issues with entrants using AI to help them explain their points; many have been using Grammarly for years to check their grammar. It is about whether the question has been answered clearly and whether the evidence is supplied.”

Judges were offered a choice of official sanctions (if any) that they believed AI-generated entries should receive, and the majority (85.7%) agreed that an AI-generated award entry should be ‘Marked the same with no consequences’. The remainder opted for the other extreme of ‘disqualified’, with none opting for in-between options like ‘marked down’ or ‘asked to resubmit’.

Is there a compromise?

Yes. There was a clear consensus.

Using AI in the process is widely accepted. There are things that humans clearly do better:

- Apply experience to pick which stories enter which categories of which awards.

- Apply business knowledge and awards expertise to make decisions on angle, scope, timeframe, who to interview, voice, tone, evidence needed, category to choose and so on.

- Interview other people for their input.

And clearly there are things that AI can help with:

- Wading through reams of text and cherry-picking relevant bits.

- Analysing data (but beware of its inability to understand context).

- Summarising (although it often misses out key statistics, and it might not make the same choices as you regarding what to include and what to ignore).

There were some interesting comments by organisers on this subject:

“AI is fine as long as it is a productivity tool and does not replace the human. It should be taking the robot out of the human.”

“We recognise that AI can be useful for things like fine-tuning grammar or even helping to brainstorm ideas: tools like ChatGPT can certainly support that. But we also believe that entries should show genuine effort and creativity, so they shouldn’t be entirely AI-written.”

“… there are accessibility considerations for those who might not have a marketing department or someone to help them write (although I’d rather a list of bullet points and rough round the edges entries that are human, than an AI polish that doesn’t reflect who a person really is).”

“I think AI-generated entry copy is ok as long as it’s reviewed by the entrant to be truthful to the work submitted, and not presenting misinformation.”

“It makes no difference if people use AI to answer the question. It has to make sense and be backed up.”

Judges also commented:

“AI can help … those with little time, experience and resources to compete against those that do. Plus, it speeds up time meaning more entries.”

“It’s inevitable that entrants will use GenAI to create and refine their entries. We would be emulating King Canute if we try to stop it and indeed, if it improves the quality of entries, why would we? Some of the entries are poorly written, but great projects. If using some additional help, either automated or human, improves quality, it’s a good thing.”

Judges made interesting suggestions as to how awards might evolve in response:

- “I think award questions in future will need to be modified to promote greater creativity and personal input, which may only be able to come from the writer’s personal knowledge.”

- “If we need to, we could include a personal presentation/ Q&A as part of the process (or other human elements).”

- “It should always be checked if using AI to make sure it reads well, makes sense, and gets the points across.”

The importance of clarity and transparency

Policies on AI-generated content are already in place or being considered by over half of the awards represented by the respondents, although the practice is still in its infancy. 12.5% of awards organisers said: “Yes – we had a rule in our most recent programme”, 21.9% said: “Yes – we will probably have a rule in our next programme”, and a further 18.8% ticked: “Yes – we will probably have a rule sometime in the future”.

Only 28.1% of awards organisers ticked: “No, we do not have a rule and have no plans to introduce one”. The remainder were undecided.

Examples of rules already in place or under discussion are:

“We will probably discourage the use of AI in entries but not penalise those who do use it.”

“We will declare we use [a] tool to check, so they need to declare if [AI is] used and where. Certain AI content will be accepted.”

“Our rule is still to be discussed but it’s tricky because we have international entrants with English not as a first language, who may use AI to help them. But then, do we trust that it is accurately representing them? And then we have entrants who are English speakers and clearly using it to save time – but this has unsatisfactory results too.”

“We have told all of our judges they cannot use AI to review nominations.”

“We use AI to assist entrants who are short on time and guide them on how to do so.”

“…It was in our criteria outlined on the website – if entrants use AI to write the entry, it better be correct and answer with [the] depth of information needed for each question. We need correct answers: whether they are written by humans or AI, no matter, but humans better check what they enter or they will not qualify as finalists.”

There were some interesting comments by organisers on this subject:

“There should be clear guidance on what is acceptable in the entry criteria and guidelines. This should then dictate the process from there in terms of how AI entries are treated. Ultimately, entries should be judged on impact and how they’ve met criteria. It’s about the project, campaign and achievements rather than the ability to write. My guess is AI will help the mapping to criteria but not the data points so they are likely to be marked down. However, extra guidance should be given to judges about what to look out for, especially if an entry is saying a lot of the right things but doesn’t have substance.”

“Clear guidelines for judges are essential to ensure fairness, as attitudes toward AI use can differ widely among them. Without these guidelines, judges who are sceptical of AI may downvote AI-generated content, or even content they only suspect involves AI, potentially penalising creators based on subjective perceptions rather than actual use. Robust rules can ensure fairness and celebrate both traditional and AI-enhanced creativity in video content.”

“A balanced policy could require applicants to clarify the extent of AI use in their entries, empowering judges to assess both the human input and the AI’s contribution. This could be supplemented with guidelines that distinguish between AI-generated content and human effort, making it easier for judges to evaluate each entry on its intended merit.”

“If AI-generated entries are a concern, establishing a clear policy on AI use will help ensure fairness and transparency.”

Clearly there is no one-size-fits-all for policies. As one judge commented: “From an organiser’s perspective, I believe that whether AI-generated content should be accepted largely depends on the nature and purpose of the award. If the award aims to recognise creative originality, skill, or personal effort, allowing AI-generated content might compromise the spirit of the competition. For awards where individual insights and unique human experiences are essential, I would lean towards discouraging or limiting AI-assisted content. However, if the award is focused on innovation, technological advancement, or adaptability, allowing AI could actually enhance the quality and depth of entries in such cases.”

Each award should make an informed judgement, maybe following a meeting with lead judges, and decide the policy that all entrants and judges should adhere to, doing their utmost to ensure that everyone reads this prior to writing and judging.

Concluding comment

As the Independent Awards Standards Council, our role is to share best practice and maintain trust in the awards industry, and ethics and transparency are vital here. Our conclusion is that the most pressing action arising from this research is for organisers to act as decision-makers and leaders within their programmes. They should factor in the need to lower barriers to inclusion in awards while ensuring that judges trust the content they have to judge. It is a difficult balance to strike. Ultimately it will be up to the organisers to make a judgement call on their specific programme and write this up as a clear, unambiguous policy that entrants and judges can buy into and apply.

Let’s all work together to ensure that business awards remain of value to those who apply, and are trusted by those who see the announcements, trophies and logos in the media.